We're not afraid of AI. We're afraid of executives.

As a former CNET employee told The Verge: “Everyone at CNET is more afraid of Red Ventures than they are of AI.”

I had a whole plan for this post about artificial intelligence. Sure, AI is posing a very dangerous threat to many industries, including journalism, but it’s not the technology that’s the problem. It’s just another reason for executives to get rid of workers they deem redundant or unnecessary.

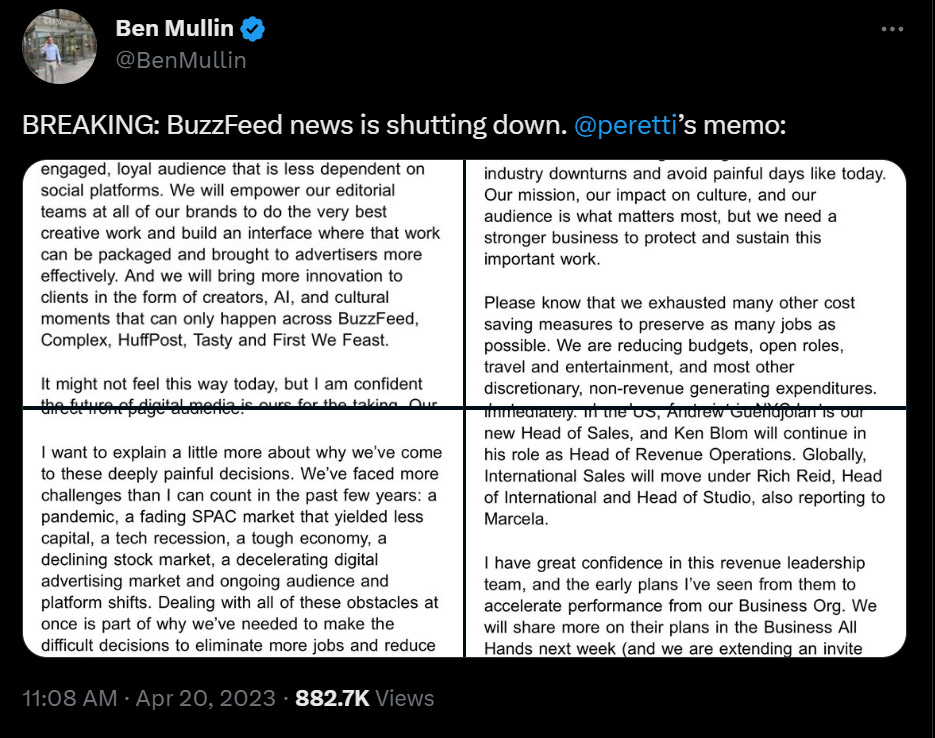

And then late last week, Insider and Buzzfeed announced massive layoffs; Insider is laying off 10% of its “team” (unclear if this is just from the newsroom or across other departments) and Buzzfeed is shutting down Buzzfeed News, its investigative journalism arm, entirely and laying off 15% of staff.

I had been writing this newsletter for weeks and they just… tweeted it out.

(And because of Muskrat’s API rules, you’ll see screenshots of the memos from Twitter instead of embedded tweets.)

The Buzzfeed News news is especially worrisome. Everybody makes fun of Buzzfeed proper since it’s the arbiter of modern-day clickbait, articles made up of stolen tweets, and timely, nonsensical quizzes, but Buzzfeed News had produced excellent reporting over the years, including a 2021 Pulitzer Prize-winning report on Muslim detention in China. But now, according to CEO Jonah Peretti’s memo, the company will be investing more in “creators” and AI enhancements in the “sales process.”

But this is also not the first time Buzzfeed News has seen massive cuts due to the whims of executives. In 2019, its national desk and national security teams, among others, were gutted by layoffs. And as I’ve noted before, Buzzfeed seems to have layoffs every couple of years. But a lot of these recent eliminations seem to be tied to two things: the looming economic recession, which has caused executives to focus on short-term profitability to the detriment of journalists, and AI. Or, at the very least, AI seems to be related.

A week ago, Insider editor-in-chief Nicholas Carlson sent out a memo concerning the business’s interest in AI, saying it will make people across the newsroom “better editors, reporters, and producers” after it made him a better leader by seemingly doing his job for him. He did spend multiple paragraphs talking about the risks of using AI, but then he added that people can “experiment” (the key word here since “implementing” it would’ve been too controversial) with using it to check for spelling errors, recommend SEO-efficient headlines, and explain “tricky” concepts, even though AI like ChatGPT has a habit of pushing out incorrect information and plagiarized text.

You can see something similar over on Buzzfeed, with CEO Jonah Peretti writing in a memo earlier this year about how the company will be using OpenAI tools to generate AI-powered quizzes, “enhancing the quiz experience, informing our brainstorming, and personalizing our content for our audience.” Employees understandably raised concerns about the move, with one asking if it would lead to a reduction in the workforce. According to the Wall Street Journal:

“Mr. Peretti told staff during the meeting that digital-media companies that choose to rely on AI solely to save costs and produce low-quality content were making a terrible use of the technology, according to the spokeswoman. That isn’t BuzzFeed’s approach, Mr. Peretti said, noting there were far more creative ways to use AI.”

You can see why people are extra worried about AI’s usage in journalism. So, yes, this is going to be a newsletter about AI tools, but it’s going to be about executives even more. Journalism is not a profitable industry, and journalism is not a product to be commoditized. It’s always been about long-term impact rather than short-term success, which is why our current AI boom is not the first time tech, innovation, or just a stupid business decision has broken up the process and threatened jobs.

AI tools can be super helpful for journalists, but they’re also great for executives who want to placate anxious investors. Why keep writers or editors on when AI can supposedly do all the work itself?

As a former CNET employee told The Verge: “They do not fear AI more than they fear the numerous layoffs Red Ventures has insisted upon… Everyone at CNET is more afraid of Red Ventures than they are of AI.”

I worked in copyediting and web production for around eight years, partly because coming out of college, I thought leaning into digital media rather than traditional reporting would be better for my stability and my anxiety. In a way, that was true. I knew about social media, SEO, and CMS production long before they were a requirement for every journalist. But it also gave me a front-row seat to watching the internet slowly throw away the copyeditor as an integral part of a newsroom.

In the mid-2010s, I was at a newspaper that was looking to partially do away with its copyediting desk and transition it into a multiplatform production one. There were still copyeditors for the paper itself, but their responsibilities would now include working in the CMS to do web production. This sort of makes sense from a workflow standpoint since it would allow completed stories to get online faster, but having new technologies forced on you, sometimes as a veteran editor, is stressful enough, let alone watching your department get turned around for some strategy you don’t understand.

Similarly, in 2017, the New York Times said it would streamline its editing process, and after deliberating, decided that eliminating the entire copy desk was the best way to go. That meant 100 editors would lose their jobs and be asked to apply to 50 open positions. This caused a shitstorm inside the NYT and led to hundreds of employees walking off the job for 15 minutes — which until G/O Media’s five-day strike in 2022 (or, most recently, Insider walking out in response to the aforementioned layoffs) was unheard of in media. In a letter sent to Executive Editor Dean Baquet and Managing Editor Joseph Kahn, the copy desk stressed its importance while also breaking down the nonsense it had dealt with over the past year and a half. They were “finding it difficult to feel respected” after having their jobs disrespected.

The full letter is worth reading, and it’ll feel familiar to anybody who’s dealt with the impending doom of newsroom layoffs. But I want to highlight the end of the letter (emphasis mine):

“You may have heard that the elimination of the copy desk is widely seen as a disaster in the making (including by many managers directly involved in the process), that the editing experiments were an open failure, and that there is dissension even in the highest ranks and across job titles regarding the new editing structure.

“But you have decided to press forward anyway, and this decision betrays a stunning lack of knowledge of what we do at The Times. Come see what we do. See the process, what comes in and what actually goes online or to print. See what we do before you decide you can live without it.”

As a former copy chief, I experienced a lot of ignorance regarding what I do, both in my writing and in my editing. It doesn’t help that AI tools like Grammarly, which purports to improve your writing but really only does so half the time, can do some of the copyediting work for the writer. It’s decent for catching spelling errors and the occasional grammar mistake, but it’s not going to make your writing pristine or account for every tone a writer can take or every situation they write for. But after I was laid off, along with the rest of the copyediting department, I still saw a former colleague say that Grammarly is an alright alternative anyway.

Something similar is happening with AI. While journalists have been using AI tools for a while now, they’ve applied them in situations where automation could actually be useful. The Associated Press has happily touted using AI for putting reports together on financials and sports scores, things nobody likes to do. That frees journalists to focus on writing up actual stories instead of tallying up numbers.

However, as we’re seeing with sites like CNET and Buzzfeed, using AI has done more harm than good. I reached out to Jon Christian, who’s been reporting on the AI-in-journalism boom at Futurism. The publication was one of the first to report on CNET’s quiet move to using AI for financial articles. He said they first started investigating after a Twitter user noticed CNET articles had a disclaimer: “This article was generated using automation technology and thoroughly edited and fact-checked by an editor on our editorial staff.” Two months of articles had been written by AI, a fact that became unfortunately apparent once Futurism started noticing extensive plagiarism. Since machine learning algorithms can only spout out, you know, what they have learned from ingested information, they’re of course risky to use when you have to produce original content.

Then, the publication found that Buzzfeed was using AI to generate travel articles. They figured this out partially by just looking at them and realizing they used a lot of the same language ad nauseam.

Yet, despite this bad press, media companies continue to want to apply the technology. From what I heard, top-brass executives continue to talk about it behind the scenes.

“I was shocked that CNET’s leadership thought this tech was mature enough to roll out publicly. Anyone with a passing familiarity with current AI tech, never mind its capabilities back in November, knows that it’s somewhat impressive, but also hugely prone to factual mistakes, bias, and other shortcomings that make it ill-suited for journalism,” Christian said.

And if Buzzfeed and Insider, among many other publications, continue to rely more heavily on AI, it’ll affect their bottom line in the long term. But what is profitability anyway? In its Q4 2022 financial report, Buzzfeed revealed it had grown 10% since 2021. But the problem is that’s not profitable enough, and that’s not going to help stock prices now.

Like NFTs before it, AI will continue to be adopted by various industries like media until it fizzles out. It’ll either be replaced by something shinier or, in the more optimistic view, will be relegated to tasks that it can do reliably.

“There’s also probably a more optimistic future you can imagine, in which publishers like CNET take a deep breath and let the tech mature — and give everyone a chance to figure out its strengths and limitations — before spinning it up as a way to generate tons of cheap content,” Christian added. “Maybe then we could find ways that AI could provide valuable new tools for quality journalism.”

But there’s a lot of money in AI right now, and what better way to get people who are really into money excited than to use the faddiest emerging tech?

That’s not going to help journalists, who are losing out to a machine that just copies what you tell it to. And it’s not going to stop a system that cares more about the bottom line than doing the work it’s supposed to do.